Navigating GCMD Science Keywords: A Researcher's Guide to Effective Data Annotation

Abstract

This article addresses the significant challenges researchers and data providers face when annotating scientific datasets with keywords from the extensive GCMD Science Keywords controlled vocabulary. We explore the foundational hurdles, including the complexity of the hierarchical system and the prevalent issue of insufficient metadata quality. The piece provides actionable methodological guidance for keyword selection and introduces both traditional and AI-driven recommendation tools. Furthermore, it offers troubleshooting strategies for common annotation problems and presents a framework for validating and comparing annotation quality. Designed for scientists and drug development professionals, this guide aims to reduce annotation costs, improve data discoverability, and enhance the overall value of research data portals.

Understanding the GCMD Science Keywords Landscape and Core Annotation Hurdles

Troubleshooting Guides & FAQs

FAQ: Vocabulary Navigation & Retrieval

Q: My search in the GCMD keyword system returns zero results, even though I know relevant terms exist. What are the most common causes?

A: This is typically caused by a mismatch between your search term and the controlled vocabulary's hierarchy. Common causes include:

- Using a synonym: The system uses preferred terms. Your term may be a variant or synonym that is not the official label.

- Incorrect hierarchical context: The term you are using may exist, but only as a child of a broader term. Searching for the child term alone may not retrieve it outside of its hierarchical context.

- Spelling or formatting errors: The vocabulary is case-insensitive but requires exact spelling. Hyphenation and compound terms must be exact.
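The synonym and formatting causes can often be ruled out mechanically before browsing the hierarchy. The sketch below is a minimal illustration, not part of any GCMD tool: it normalizes case, hyphens, and whitespace, then looks the term up among preferred labels and synonyms. The `VOCAB` mapping, its synonym lists, and the function names are assumptions made up for this example; a real workflow would load labels from the vocabulary itself.

```python
import re

# Hypothetical mini-vocabulary: preferred label -> illustrative synonyms.
VOCAB = {
    "SEA SURFACE TEMPERATURE": ["SST", "OCEAN SURFACE TEMPERATURE"],
    "SOIL MOISTURE/WATER CONTENT": ["SOIL MOISTURE", "SOIL WATER CONTENT"],
}


def normalize(term: str) -> str:
    """Uppercase, turn hyphens/underscores into spaces, collapse whitespace."""
    cleaned = re.sub(r"[-_]+", " ", term.upper())
    return re.sub(r"\s+", " ", cleaned).strip()


def resolve(term: str, vocab=VOCAB):
    """Return the preferred label for a term or synonym, or None if no match."""
    wanted = normalize(term)
    for preferred, synonyms in vocab.items():
        candidates = [preferred, *synonyms]
        if wanted in (normalize(c) for c in candidates):
            return preferred
    return None


print(resolve("soil-moisture"))
print(resolve("ocean colour"))
```

A `None` result suggests the term is either missing from the synonym list or only reachable by browsing from a broader term.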

Q: How do I choose the most specific keyword available without going too narrow for my research data?

A: Utilize the "Broader" and "Narrower" relationship indicators within the GCMD hierarchy. Start with a general term you know is relevant. Examine its "Narrower" terms to see if any more precisely describe your work. The goal is to find the term that is specific enough to be meaningful for discovery but broad enough to accurately encompass your entire dataset. If no single term is perfect, using multiple keywords from the same branch is an accepted practice.

Q: What is the practical difference between a "Theme" keyword and a "Topic" keyword in GCMD, and how does it affect annotation?

A: The Science Keywords form a single hierarchy organized into tiers. "Theme" is the highest level of categorization (e.g., "EARTH SCIENCE"; later sections of this guide call this tier "Category"), while "Topic" is the next level down (e.g., "BIOSPHERE"). During annotation, you typically select a leaf node, the most specific term available, which automatically implies its parent Theme, Topic, and other tiers. You do not need to select each tier individually.
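To make the "leaf implies its parents" point concrete, here is a small sketch that expands one full keyword path into the broader paths it implies. The function name and the `>` separator convention are assumptions for illustration.

```python
def implied_ancestors(path: str, sep: str = ">") -> list[str]:
    """List every broader path implied by a full keyword path, broadest first."""
    parts = [p.strip() for p in path.split(sep) if p.strip()]
    return [" > ".join(parts[:i]) for i in range(1, len(parts))]


leaf = "EARTH SCIENCE > BIOSPHERE > ECOLOGICAL DYNAMICS > ECOSYSTEM FUNCTIONS"
for ancestor in implied_ancestors(leaf):
    print(ancestor)
```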

Troubleshooting Guide: Annotation Consistency

Problem: Inconsistent keyword assignment across datasets from a multi-institutional project.

Diagnosis: This is a common challenge in collaborative science. It arises from differing interpretations of the vocabulary hierarchy and a lack of a standardized annotation protocol.

Solution: Implement a Project-Level Keyword Convention.

Experimental Protocol: Establishing a Keyword Annotation Standard

- Convene an Annotation Working Group: Assemble key researchers and data managers from all participating institutions.

- Identify Core Research Areas: List the primary scientific domains covered by the project.

- Map to GCMD Vocabulary: For each core area, collaboratively identify the most appropriate GCMD Science Keyword leaf node. Document the full path of each chosen term.

- Create a Project-Specific Guide: Develop a living document (e.g., a shared spreadsheet or wiki) that lists common data types produced by the project and the exact GCMD keywords to be used for each.

- Validate and Revise: Have a small team annotate a sample dataset using the guide. Refine the guide based on ambiguities or gaps discovered.

- Distribute and Train: Share the final guide and conduct a brief training session for all data contributors.
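Once a project guide exists, checking a dataset against it can be automated. The following is a minimal sketch under stated assumptions: the guide is held as a mapping from data type to agreed keyword paths, and the data type names, keyword paths, and `check_annotation` function are all hypothetical.

```python
# Hypothetical project guide: data type -> agreed full keyword paths.
PROJECT_GUIDE = {
    "ctd_profile": {"EARTH SCIENCE > OCEANS > OCEAN TEMPERATURE > WATER TEMPERATURE"},
    "flux_tower": {"EARTH SCIENCE > BIOSPHERE > ECOLOGICAL DYNAMICS > ECOSYSTEM FUNCTIONS"},
}


def check_annotation(data_type: str, keywords: set[str], guide=PROJECT_GUIDE) -> dict:
    """Compare a dataset's keywords with the guide entry for its data type."""
    expected = guide.get(data_type)
    if expected is None:
        # The guide has a gap: this data type should be added during revision.
        return {"status": "unknown_data_type", "missing": set(), "unexpected": set()}
    missing = expected - keywords
    unexpected = keywords - expected
    status = "ok" if not missing and not unexpected else "needs_review"
    return {"status": status, "missing": missing, "unexpected": unexpected}


report = check_annotation("ctd_profile", {"EARTH SCIENCE > OCEANS"})
print(report["status"], report["missing"])
```

An `unknown_data_type` result is a prompt to revise the guide rather than an annotation error.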

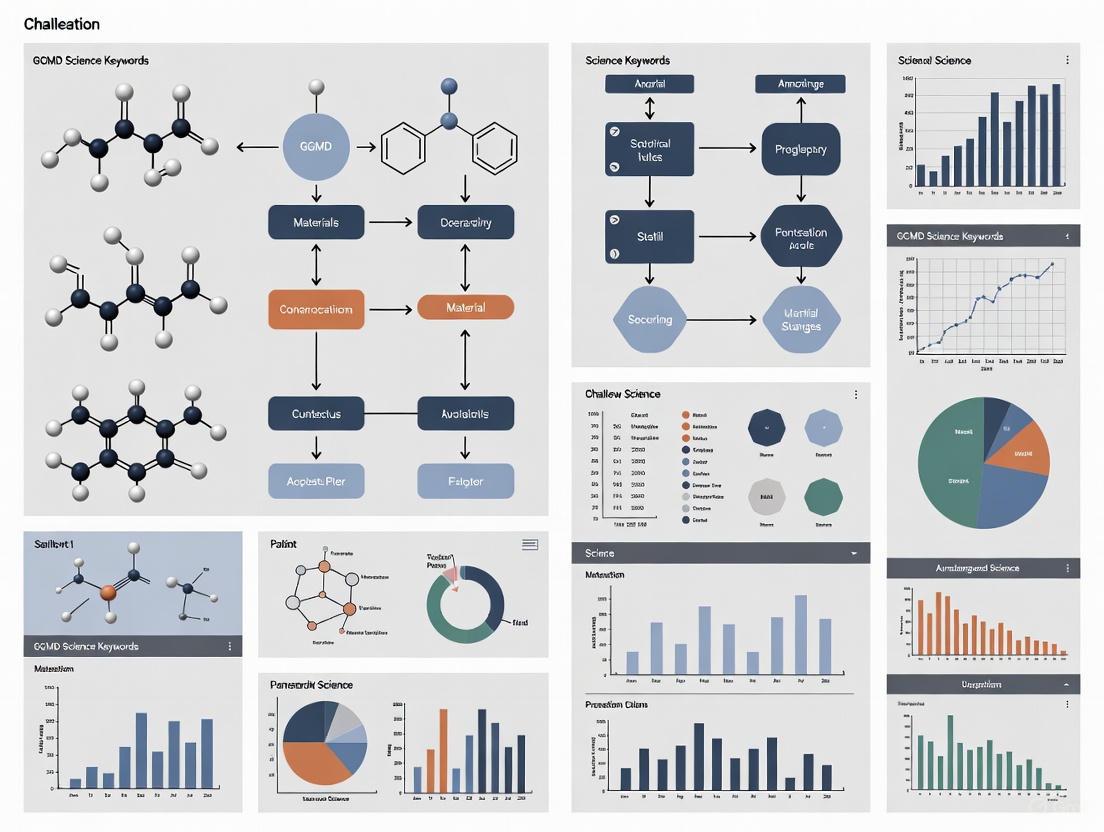

Diagram: Keyword Harmonization Workflow

Data Presentation: Common GCMD Keyword Tiers

| Tier Name | Description | Example |

|---|---|---|

| Theme | The highest level of categorization. | EARTH SCIENCE |

| Topic | A major sub-discipline within the theme. | BIOSPHERE |

| Term | A specific subject area within the topic. | ECOLOGICAL DYNAMICS |

| Variable | A measurable phenomenon. | ECOSYSTEM FUNCTIONS |

| Detailed Variable | The most specific level of the hierarchy. | BIODIVERSITY FUNCTIONS |

FAQ: Vocabulary Updates and Governance

Q: How often is the GCMD keyword vocabulary updated, and how can I request a new term?

A: The GCMD vocabulary is updated on a rolling basis as new scientific disciplines and measurement techniques emerge. Requests for new terms are submitted through the GCMD Community Forum. The process involves proposing the new term, providing a definition, and suggesting its placement within the existing hierarchy. The request is then reviewed by the GCMD governance board and relevant scientific community experts.

Q: What should I do if I cannot find a keyword that accurately describes my research, even after exploring the entire hierarchy?

A: First, consult with colleagues or your institutional data manager to ensure you haven't overlooked a relevant term. If no term is found, you have two options:

- Use the closest broader term: Select the most accurate parent term available. In your dataset's metadata "description" field, explicitly state the specific research focus using free text.

- Propose a new term: Follow the official process on the GCMD Community Forum to propose the addition of a new keyword. This contributes to the community resource.

The Scientist's Toolkit: Research Reagent Solutions

| Item | Function in Vocabulary Research |

|---|---|

| GCMD Keyword Portal | The primary interface for browsing and searching the hierarchical vocabulary. |

| GCMD Community Forum | Platform for discussing term definitions, reporting issues, and proposing new keywords. |

| ISO 19115/19139 | The international standard for geographic metadata, which the GCMD keywords are designed to complement. |

| JSON-LD API | A machine-readable interface for programmatically accessing the GCMD vocabulary, enabling integration into data management workflows. |

| Project-Specific Annotation Guide | A living document that standardizes keyword choices for a collaborative project, ensuring consistency. |

Data Presentation: Sample Annotation Metrics from a Collaborative Study

| Research Group | Initial Keyword Consistency | Post-Protocol Keyword Consistency | Time Spent on Annotation (per dataset) |

|---|---|---|---|

| Group A (Ecology) | 45% | 92% | 15 min -> 5 min |

| Group B (Oceanography) | 60% | 95% | 20 min -> 7 min |

| Group C (Atmospheric) | 30% | 89% | 25 min -> 8 min |

| Project Average | 45% | 92% | 20 min -> 7 min |
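The table does not state how keyword consistency was computed. One plausible way to measure it, shown here purely as an assumption, is the mean pairwise Jaccard overlap between the keyword sets that different annotators assign to the same dataset. The function names are hypothetical.

```python
from itertools import combinations


def jaccard(a: set, b: set) -> float:
    """Share of keywords common to both sets; two empty sets count as agreement."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def mean_pairwise_agreement(annotations: list[set]) -> float:
    """Average Jaccard overlap over every pair of annotators."""
    pairs = list(combinations(annotations, 2))
    if not pairs:
        return 1.0
    return sum(jaccard(a, b) for a, b in pairs) / len(pairs)


# Three annotators tagging the same dataset (toy labels).
sets = [{"A", "B"}, {"A", "B"}, {"A"}]
print(round(mean_pairwise_agreement(sets), 3))
```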

Diagram: GCMD Science Keyword Hierarchical Structure

Technical Support Center

Troubleshooting Guides

Troubleshooting Guide 1: Resolving "Keyword Not Found" Errors During Dataset Annotation

Issue: When annotating your dataset in the Earthdata portal, you cannot find a suitable GCMD Science Keyword to accurately describe your research parameters.

Explanation: The GCMD Science Keywords utilize a controlled, hierarchical vocabulary [1]. Your specific research term may exist at a different level of the hierarchy than expected, or it may be a new concept not yet incorporated into the keyword system.

Step-by-Step Resolution:

- Navigate the Hierarchy: Use the GCMD Keyword Viewer to browse the keyword structure. Begin with a broad Category (e.g., "Earth Science") and progressively drill down through Topic, Term, and Variable levels to locate the most specific match [1].

- Identify the Gap: If a suitable keyword is not found, document the precise term needed and its position within the GCMD hierarchy (e.g., Earth Science > Oceans > Ocean Chemistry > My New Parameter).

- Submit a Formal Request: Access the GCMD Keyword Forum to submit a new keyword request [1] [2]. Provide the short name, long name, and a detailed description for the proposed keyword, as demonstrated in the successful request for the "Arctic Challenge for Sustainability III" project [2].

- Use the Detailed Variable Field: As an interim solution, you can use the most relevant existing keyword and add your specific parameter in the "Detailed Variable" field, which is an uncontrolled field for user-provided specifics [1].

Expected Outcome: The GCMD team will review your request. Once approved and added, the new keyword will be available for all users, enhancing the discoverability of your and others' datasets [2].

Table: GCMD Science Keyword Hierarchy Structure

| Keyword Level | Description | Example |

|---|---|---|

| Category | High-level scientific discipline | Earth Science |

| Topic | Major concept within the discipline | Atmosphere |

| Term | Specific subject area | Weather Events |

| Variable Level 1 | Measured parameter or variable | Subtropical Cyclones |

| Variable Level 2 | More specific classification | Subtropical Depression |

| Detailed Variable | Uncontrolled field for user specifics | (User-defined) |
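The levels in the table map naturally onto a small record type. The sketch below is illustrative only: the class and field names are assumptions, and it simply keeps the controlled levels separate from the free-text Detailed Variable so the two are never confused.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ScienceKeyword:
    category: str
    topic: str
    term: str
    variable_level_1: Optional[str] = None
    variable_level_2: Optional[str] = None
    detailed_variable: Optional[str] = None  # uncontrolled, user-provided text

    def __post_init__(self):
        # A deeper level only makes sense when its parent level is present.
        if self.variable_level_2 and not self.variable_level_1:
            raise ValueError("variable_level_2 requires variable_level_1")

    def controlled_path(self) -> str:
        """Join the controlled levels that are present, broadest first."""
        levels = [self.category, self.topic, self.term,
                  self.variable_level_1, self.variable_level_2]
        return " > ".join(level.upper() for level in levels if level)

    def full_path(self) -> str:
        """Controlled path plus the free-text Detailed Variable, if any."""
        if self.detailed_variable:
            return f"{self.controlled_path()} > {self.detailed_variable}"
        return self.controlled_path()


kw = ScienceKeyword("Earth Science", "Atmosphere", "Weather Events",
                    "Subtropical Cyclones", "Subtropical Depression")
print(kw.full_path())
```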

Troubleshooting Guide 2: Addressing Inconsistent Search Results Due to Legacy Metadata

Issue: Searches for datasets in the Common Metadata Repository (CMR) yield inconsistent or incomplete results, even when using approved GCMD keywords.

Explanation: NASA's EOSDIS is transitioning from legacy metadata standards (like DIF and ECHO) to the international ISO 19115 standard [3]. During this transition, older datasets with legacy metadata may not be fully interoperable with the newer, unified system, affecting search reliability.

Step-by-Step Resolution:

- Verify Metadata Standard: Check the metadata record for the dataset to identify which standard it uses (e.g., DIF, ECHO, or ISO). This information is often available in the metadata header or through the data center portal.

- Broaden Search Strategy: If you suspect a dataset is missing, try using broader or alternative GCMD keywords. Legacy metadata might have been mapped to a different, but related, term during the translation to the Unified Metadata Model (UMM) [3].

- Utilize the UMM: Understand that the Unified Metadata Model acts as a bridge. Your search query using a GCMD keyword is processed through the UMM, which then queries both ISO and translated legacy metadata [3].

- Report the Gap: If a known dataset consistently fails to appear in searches using correct keywords, report the issue to the relevant DAAC or GCMD User Support. This indicates a potential gap in the metadata migration or mapping process that needs manual review [3].

Expected Outcome: A more robust search strategy that accounts for metadata variability. Reporting issues contributes to the ongoing improvement of metadata quality across the portal.

Experimental Protocol: Validating Keyword-Driven Data Discovery Workflow

Objective: To quantitatively assess the impact of metadata evolution on the discoverability of Earth science datasets using GCMD keywords.

Methodology:

- Keyword Selection: Select a set of 20 GCMD Science Keywords representing diverse Earth science domains (e.g., "Ocean Chlorophyll," "Soil Moisture," "Atmospheric Ozone").

- Query Execution: For each keyword, execute an identical search query against the CMR on a monthly basis over a 12-month period.

- Data Collection: For each search, record:

- The total number of datasets returned.

- The percentage of datasets with metadata identified as "ISO 19115 compliant."

- The percentage of datasets with metadata identified as "Legacy (DIF/ECHO)."

- Data Analysis: Calculate the correlation between the adoption of ISO standards and changes in search result consistency and volume over time.

Table: Key Metrics for Data Discovery Validation

| Metric | Measurement Method | Significance |

|---|---|---|

| Search Result Volatility | Standard deviation in dataset count for repeated keyword searches | Indicates stability of the metadata repository. |

| ISO Compliance Rate | Percentage of returned datasets with ISO 19115 metadata | Tracks progress in metadata standardization. |

| Legacy Metadata Prevalence | Percentage of returned datasets using DIF or ECHO standards | Identifies areas requiring metadata migration effort. |
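The three metrics reduce to simple arithmetic once the monthly search results have been recorded. The sketch below assumes each returned dataset is stored as a dictionary with a hypothetical `metadata_standard` field, and it uses the sample standard deviation; neither choice comes from the protocol itself.

```python
from statistics import stdev


def volatility(monthly_counts: list[int]) -> float:
    """Sample standard deviation of dataset counts across repeated searches."""
    return stdev(monthly_counts) if len(monthly_counts) > 1 else 0.0


def share_with_standard(records: list[dict], standards: set[str]) -> float:
    """Percentage of returned datasets whose metadata uses one of the standards."""
    if not records:
        return 0.0
    matching = sum(1 for r in records if r["metadata_standard"] in standards)
    return 100.0 * matching / len(records)


# Toy results for one keyword; the field name and values are illustrative.
results = [
    {"id": "ds1", "metadata_standard": "ISO 19115"},
    {"id": "ds2", "metadata_standard": "ISO 19115"},
    {"id": "ds3", "metadata_standard": "DIF"},
    {"id": "ds4", "metadata_standard": "ECHO"},
]
print(volatility([10, 12, 14]))
print(share_with_standard(results, {"ISO 19115"}))
print(share_with_standard(results, {"DIF", "ECHO"}))
```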

Frequently Asked Questions (FAQs)

Q1: What are GCMD Keywords and why is their consistent annotation critical for my research?

A: GCMD Keywords are a hierarchical set of controlled vocabularies designed to describe Earth science data, services, and variables in a consistent manner [1]. Consistent annotation is critical because it enables precise searching across massive data repositories (like NASA's 9+ petabyte archive), ensures interdisciplinary interoperability, and allows for the accurate aggregation of data from different sources for large-scale studies [1] [3]. Poor annotation directly leads to a "metadata quality crisis," where valuable data becomes hard to find, use, and trust.

Q2: Our project is new and does not exist in the GCMD Project Keywords list. What is the official process to have it added?

A: The process is managed through a community forum. You must submit a formal request on the GCMD Keyword Forum, which is now part of the Earthdata Forum [1] [2]. Your request should include a proposed Short Name (e.g., "ArCS III") and a Project Title/Long Name (e.g., "Arctic Challenge for Sustainability III"), along with a clear description of the project's goals [2]. The GCMD team reviews these requests and, upon approval, adds them to the official list.

Q3: How is NASA addressing the challenge of inconsistent metadata quality across its vast data holdings?

A: NASA is undertaking a multi-pronged approach:

- Adopting International Standards: Mandating the use of ISO 19115 metadata standards for new missions and migrating existing data to this standard [3].

- Implementing a Common Repository: Using the Common Metadata Repository (CMR) as a unified system to manage metadata evolution [3].

- Creating a Unified Model: The Unified Metadata Model (UMM) defines core metadata requirements and bridges the gap between legacy standards and ISO [3].

- Formal Quality Assurance: Implementing automated validation and manual review processes to ensure metadata is consistent and complete [3].

Visualization: GCMD Keyword Annotation and Troubleshooting Workflow

The Scientist's Toolkit: Research Reagent Solutions for Metadata Quality

Table: Essential Components for Robust Metadata Management

| Item / Concept | Function / Explanation |

|---|---|

| GCMD Keyword Viewer | The primary tool for browsing and identifying the correct hierarchical keywords for dataset annotation [1]. |

| Earthdata Forum (GCMD Section) | The official platform for community discussion, asking questions, and submitting new keyword requests [1]. |

| ISO 19115 Standard | The international metadata standard that ensures interoperability and data understanding across global organizations [3]. |

| Common Metadata Repository (CMR) | The authoritative metadata management system for NASA's EOSDIS, which streamlines workflows and improves data quality [3]. |

| Unified Metadata Model (UMM) | A core set of metadata requirements that serves as a bridge between different metadata standards, enabling search and retrieval across legacy and modern systems [3]. |

Frequently Asked Questions

FAQ 1: Why is selecting the right GCMD Science Keywords so difficult? Selecting the right keywords is difficult because it requires extensive knowledge of both your specialized research domain and the vast, complex GCMD controlled vocabulary. The vocabulary contains approximately 3,000 keywords organized in a multi-level hierarchy, making it challenging to find the most specific and appropriate terms for your data [4].

FAQ 2: What are the consequences of poor or minimal keyword annotation? Poorly annotated datasets are harder for others to discover, which reduces the impact and reuse of your research data. It also hinders the association of related datasets and weakens the overall quality of data portals, creating a cycle that makes future keyword recommendation tools less effective [4].

FAQ 3: Is there any help available for the keyword selection process? Yes. The GCMD supports a community-driven process for keyword development and assistance. You can use the GCMD Keyword Forum to ask questions, discuss trade-offs, and submit requests for new keywords if you cannot find a suitable existing term [1] [2].

FAQ 4: What is the difference between the 'direct' and 'indirect' methods of keyword recommendation? The indirect method recommends keywords based on terms used in similar existing datasets. The direct method recommends keywords by matching your dataset's abstract text to the definition sentences of keywords in the vocabulary. The direct method is more reliable when the existing pool of metadata is poorly annotated [4].

Troubleshooting Guides

Problem: I feel overwhelmed by the number of keywords and spend too much time browsing the hierarchy.

Solution: Understand the keyword structure and employ strategic methods.

- Guide:

- Learn the Hierarchy: Familiarize yourself with the standard GCMD Science Keywords structure. It follows a multi-level path from general to specific, in which the Variable tier may be subdivided into numbered levels [1]:

Category > Topic > Term > Variable > Detailed Variable

- Start Broad, Then Narrow Down: Begin with a broad category (e.g., "Earth Science") and systematically drill down through topics and terms. This is more efficient than searching for a specific term from the start.

- Leverage Keyword Recommendation Tools: Emerging tools can reduce your workload. The table below compares two primary methods explored in recent research [4]:

| Method | Description | Pros | Cons |

|---|---|---|---|

| Indirect Method | Recommends keywords based on annotations from similar existing datasets in a metadata portal. | Can leverage collective knowledge from well-annotated datasets. | Highly dependent on the quality and quantity of pre-existing metadata; ineffective if similar datasets are poorly annotated. |

| Direct Method | Recommends keywords by analyzing the abstract of your dataset and matching it to the definition sentences of keywords. | Does not rely on existing metadata; useful for novel research with few similar datasets. | Requires a well-written, informative abstract for your dataset to function accurately. |
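As a rough illustration of the indirect method, the sketch below finds the existing datasets whose abstracts overlap most with the target abstract and recommends the keywords they use most often. Token-level Jaccard overlap stands in for whatever similarity measure a real system would use, and the repository entries, field names, and function names are invented for this example.

```python
import re
from collections import Counter


def tokens(text: str) -> set[str]:
    """Lowercased alphabetic word set of a text."""
    return set(re.findall(r"[a-z]+", text.lower()))


def recommend_indirect(abstract: str, repository: list[dict], m: int = 2, n: int = 3) -> list[str]:
    """Recommend the n most frequent keywords among the m most similar datasets."""
    target = tokens(abstract)

    def similarity(entry: dict) -> float:
        other = tokens(entry["abstract"])
        union = target | other
        return len(target & other) / len(union) if union else 0.0

    neighbours = sorted(repository, key=similarity, reverse=True)[:m]
    votes = Counter(kw for entry in neighbours for kw in entry["keywords"])
    return [kw for kw, _ in votes.most_common(n)]


# Toy repository of previously annotated datasets (illustrative only).
repo = [
    {"abstract": "Monthly soil moisture from satellite radiometer",
     "keywords": ["SOIL MOISTURE/WATER CONTENT", "LAND SURFACE"]},
    {"abstract": "Daily soil moisture and soil temperature from field probes",
     "keywords": ["SOIL MOISTURE/WATER CONTENT", "SOIL TEMPERATURE"]},
    {"abstract": "Ocean chlorophyll concentration from ocean color sensors",
     "keywords": ["CHLOROPHYLL"]},
]
print(recommend_indirect("Weekly soil moisture maps from satellite data", repo))
```

The weakness noted in the table is visible here: if the neighbouring datasets carry few or wrong keywords, the votes have nothing useful to aggregate.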

Problem: My research is novel, and I cannot find keywords that precisely describe my dataset.

Solution: Use the uncontrolled "Detailed Variable" field and participate in the community process.

- Guide:

- Use the Uncontrolled Field: The GCMD keyword structure includes an optional, uncontrolled "Detailed Variable" field at the end of the hierarchy. Use this to add a specific parameter or measurement name that is not yet in the controlled vocabulary [1].

- Request a New Keyword: If a keyword is fundamentally missing, you can submit a formal request for its addition via the GCMD Keyword Forum. The process is collaborative, and the GCMD team reviews requests for inclusion in the vocabulary [1] [2]. An example of a successfully added project keyword is "ArCS III > Arctic Challenge for Sustainability III" [2].

Problem: I am unsure how many keywords to assign or how specific I should be.

Solution: Aim for a balance of breadth and depth.

- Guide:

- Avoid Under-annotation: Many datasets are annotated with fewer than 5 keywords, which limits their discoverability. Do not stop at a high-level category; try to reach at least the "Term" or "Variable" level [4].

- Annotate for Different Users: Consider the various ways a researcher might search for your data. Include keywords that cover the measured parameters, the geographic location, the platform or instrument used, and the overarching scientific topic.
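A quick self-check for under-annotation can be scripted. This sketch flags a dataset that has fewer than five keywords (the threshold cited above) or keywords that stop short of the Term level, taken here as a depth of three path segments. The function name and the depth convention are assumptions.

```python
def annotation_report(paths: list[str], min_keywords: int = 5, min_depth: int = 3) -> dict:
    """Flag too few keywords and keywords that stop above the Term level."""
    depths = [len([p for p in path.split(">") if p.strip()]) for path in paths]
    return {
        "keyword_count": len(paths),
        "too_few": len(paths) < min_keywords,
        "shallow": [path for path, d in zip(paths, depths) if d < min_depth],
    }


report = annotation_report([
    "EARTH SCIENCE > ATMOSPHERE",
    "EARTH SCIENCE > ATMOSPHERE > WEATHER EVENTS > SUBTROPICAL CYCLONES",
])
print(report)
```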

Experimental Protocols & Data

Quantitative Profile of the Annotation Challenge

The following table summarizes key data points that illustrate the scale of the keyword selection burden, derived from empirical research [4].

| Metric | Value / Finding | Context / Implication |

|---|---|---|

| Total GCMD Science Keywords | ~3,000 keywords | Represents the vast vocabulary a data provider must navigate. |

| Poorly Annotated Datasets (GCMD Portal) | ~25% (approx. 8,183 of 32,731 datasets) | A significant portion of datasets have fewer than 5 keywords, highlighting a widespread issue. |

| Average Keywords per Dataset (DIAS) | ~3 keywords | Suggests widespread under-annotation, far below the vocabulary's potential. |

Protocol: Methodology for Evaluating Keyword Recommendation Systems

Research into solving the keyword burden often involves evaluating automated recommendation methods. The protocol below is adapted from studies comparing the "direct" and "indirect" methods [4].

- Objective: To evaluate the efficacy of keyword recommendation methods in reducing the data provider's annotation burden.

- Inputs:

- Target Dataset: A dataset with metadata (especially an abstract) but no GCMD keywords.

- Controlled Vocabulary: The GCMD Science Keywords with their hierarchical structure and definition sentences.

- Existing Metadata Repository: A collection of previously annotated datasets (for the indirect method).

- Procedure:

- Pre-processing: Clean and preprocess the abstract text from the target dataset and all keyword definitions (for the direct method) or existing metadata (for the indirect method).

- Similarity Calculation:

- For the Direct Method: Calculate the semantic similarity between the target dataset's abstract and the definition sentence of each keyword in the vocabulary.

- For the Indirect Method: Calculate the similarity between the target dataset's abstract and the abstracts of all datasets in the existing repository.

- Keyword Recommendation:

- For the Direct Method: Recommend the top N keywords with the highest similarity scores to their definitions.

- For the Indirect Method: Identify the top M most similar existing datasets and aggregate the keywords they use. Recommend the most frequent keywords from this set.

- Evaluation: Use hierarchical evaluation metrics that assign higher weight to the correct recommendation of specific, lower-level keywords, as these are considered more costly for a human to find.

- Output: A ranked list of recommended keywords for the target dataset, with an accuracy score that reflects the method's performance on hard-to-find terms.
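The direct branch of the procedure can be sketched in a few lines. Note the substitution: instead of a true semantic similarity model, this example uses cosine similarity over raw word counts, which is only a crude stand-in. The keyword definitions below are invented placeholders, not the official GCMD definition sentences, and the function names are hypothetical.

```python
import math
import re
from collections import Counter


def vectorize(text: str) -> Counter:
    """Bag-of-words term counts for a text."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def recommend_direct(abstract: str, definitions: dict[str, str], n: int = 3) -> list[str]:
    """Rank keywords by similarity between the abstract and each definition."""
    target = vectorize(abstract)
    scored = [(cosine(target, vectorize(text)), kw) for kw, text in definitions.items()]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [kw for score, kw in scored[:n] if score > 0]


# Placeholder definitions written for this example only.
definitions = {
    "SOIL MOISTURE/WATER CONTENT": "The amount of water held in the soil",
    "CHLOROPHYLL": "Green pigment concentration in ocean phytoplankton",
}
print(recommend_direct("Water content of soil measured by probes", definitions))
```

Because the score depends entirely on the abstract's wording, a terse or uninformative abstract yields few or no recommendations, which matches the limitation listed for the direct method.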

The Scientist's Toolkit: Research Reagent Solutions

The following table details key conceptual "tools" and methods relevant to research focused on improving the GCMD keyword annotation process.

| Tool / Method | Function in Research |

|---|---|

| GCMD Keyword Forum | The primary platform for community discussion, asking questions, and submitting formal requests for new keywords [1] [2]. |

| Hierarchical Evaluation Metrics | Specialized metrics used to assess keyword recommendation systems. They emphasize the accurate suggestion of specific, lower-level keywords, which are more difficult for data providers to manually locate in the vocabulary [4]. |

| Controlled Vocabulary (Thesaurus) | A restricted set of standardized terms (like GCMD Science Keywords) used to ensure consistent description and classification of data, eliminating noise from synonyms and spelling variations [4]. |

| Semantic Similarity Analysis | A computational technique at the heart of the "direct" recommendation method. It measures the likeness in meaning between text (e.g., a dataset abstract) and a keyword's definition [4]. |

| Metadata Quality Assessment | The process of evaluating existing metadata repositories for factors like annotation completeness, which is crucial for determining the viability of the "indirect" recommendation method [4]. |
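The exact hierarchical metrics used in [4] are not reproduced here. As one simple way to express the same idea, the sketch below computes a depth-weighted recall in which each correct keyword counts in proportion to its depth in the hierarchy, so recovering a deep keyword is rewarded more than recovering a shallow one. The weighting scheme and function names are assumptions.

```python
def depth(path: str) -> int:
    """Number of hierarchy levels in a keyword path."""
    return len([p for p in path.split(">") if p.strip()])


def depth_weighted_recall(recommended: set[str], gold: set[str]) -> float:
    """Recall in which each gold keyword is weighted by its hierarchy depth."""
    total = sum(depth(k) for k in gold)
    if total == 0:
        return 0.0
    hit = sum(depth(k) for k in gold if k in recommended)
    return hit / total


gold = {
    "EARTH SCIENCE > OCEANS",
    "EARTH SCIENCE > OCEANS > OCEAN TEMPERATURE > SEA SURFACE TEMPERATURE",
}
deep_only = {"EARTH SCIENCE > OCEANS > OCEAN TEMPERATURE > SEA SURFACE TEMPERATURE"}
shallow_only = {"EARTH SCIENCE > OCEANS"}
print(depth_weighted_recall(deep_only, gold), depth_weighted_recall(shallow_only, gold))
```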

Keyword Selection Workflow

The diagram below visualizes the logical workflow a data provider faces when annotating data with GCMD keywords, highlighting points of friction and potential assistance from recommendation methods.

This guide addresses the critical challenges researchers face when annotating datasets with Global Change Master Directory (GCMD) Science Keywords. Proper annotation is fundamental for data discovery, integration, and reuse. The FAQs and troubleshooting guides below are designed to help you diagnose and resolve common annotation issues, thereby improving the quality and interoperability of your research data.

Frequently Asked Questions (FAQs)

FAQ 1: What are GCMD Keywords and why are they important for my research? GCMD Keywords are a hierarchical set of controlled vocabularies for consistently describing Earth science data, services, and variables [1]. They are crucial because they enable precise searching of metadata and reliable retrieval of data across different systems and organizations [1]. Many U.S. and international agencies, including NASA EOSDIS Data Centers and NOAA, use them as an authoritative taxonomy [1].

FAQ 2: My dataset doesn't appear in search results on data portals. What could be wrong? This is a classic symptom of poor or incorrect keyword annotation. If your dataset is not tagged with the correct, specific keywords from the GCMD hierarchy, search algorithms will not be able to match it to user queries. This directly hinders data discovery [5].

FAQ 3: Why can't I easily combine my dataset with another that seems to be on a similar topic? Even if datasets are on similar topics, if they are annotated with different or inconsistent keywords, it creates a semantic barrier. This lack of standardized annotation severely compromises interdisciplinary interoperability, making data harmonization and synthesis difficult [6] [5].

FAQ 4: How specific should my GCMD keyword annotation be? You should always aim for the most specific level of the hierarchy that accurately describes your data. The GCMD Earth Science keywords have a structure that goes from broad (Category, Topic) to specific (Term, Variable, Detailed Variable) [1]. Using overly broad keywords reduces discoverability. For example, instead of just Atmosphere, you should drill down to a specific variable if possible.

FAQ 5: Where can I request a new GCMD keyword if none fit my project? New keywords can be proposed through the GCMD Keyword Forum, which is now part of the Earthdata Forum [1]. The process involves community discussion and review by the GCMD team to ensure the integrity and usefulness of the keywords [1] [2].

Troubleshooting Guides

Problem: Low Dataset Discoverability

Description: Your published dataset is not being found, downloaded, or cited by other researchers, indicating a potential issue with its visibility in metadata catalogues.

Diagnosis and Solution:

- Audit Your Current Keywords: Compare the keywords you used against the official GCMD Keyword Viewer [1]. Verify they are current and correctly spelled.

- Check Keyword Specificity: Ensure you have used the most specific term available. The table below outlines the hierarchical structure you should follow.

| Annotation Level | Description | Example | Impact of Poor Annotation |

|---|---|---|---|

| Category | Broad scientific discipline | Earth Science | Data is placed in an overly broad category, making it hard to find. |

| Topic | High-level concept within the discipline | Atmosphere | |

| Term | Specific subject area | Weather Events | |

| Variable | Measured parameter | Subtropical Cyclones | Data discovery becomes imprecise; relevant users cannot find it. |

| Detailed Variable | Uncontrolled, highly specific descriptor | Subtropical Depression Track | Lacks the granularity needed for precise, automated data retrieval. |

- Verify Project Association: Many data repositories allow you to link your dataset to a specific research project. If your project has a dedicated GCMD keyword (e.g., ArCS III > Arctic Challenge for Sustainability III [2]), using it can significantly enhance discoverability within your research community.

Problem: Inability to Integrate Datasets for Analysis

Description: You are unable to computationally combine your dataset with others for cross-disciplinary analysis, often due to semantic inconsistencies.

Diagnosis and Solution:

- Identify Annotation Gaps: The diagram below illustrates a typical annotation workflow and where failures can break the chain of interoperability.

- Adopt Common Data Models (CDMs): For complex data integration tasks, consider using a Common Data Model. CDMs like the Observational Medical Outcomes Partnership (OMOP) model standardize the structure, format, and content of data from different sources, facilitating harmonization [7]. While originating in healthcare, the principle is applicable to Earth science.

Problem: Ambiguous or Outdated Keyword Usage

Description: Uncertainty about which keyword to use, or the discovery that a needed keyword does not exist in the GCMD vocabulary.

Diagnosis and Solution:

- Consult the Governance Guide: The GCMD Keyword Governance and Community Guide Document provides a comprehensive resource for the community, describing the governance structures and processes for reviewing proposed changes [1].

- Engage with the Community: Use the GCMD Keyword Forum to ask questions, discuss trade-offs, and track the status of keyword requests [1]. This is the primary channel for community feedback and ensuring the keywords evolve to meet user needs [5]. A real-world example of this process is the successful addition of the "Arctic Challenge for Sustainability III" project keyword [2].

Experimental Protocol: Systematic Assessment of Annotation Quality

This protocol provides a methodology for evaluating the effectiveness of GCMD keyword annotations within a data repository or for a specific set of datasets, as cited in research on metadata quality.

Objective: To quantitatively and qualitatively measure the impact of annotation quality on data discovery.

Methodology:

- Define a Test Corpus: Select a representative sample of datasets from your repository or research field.

- Extract and Categorize Keywords: For each dataset, extract the assigned GCMD keywords. Categorize them according to the GCMD hierarchy (Category, Topic, Term, Variable) [1].

- Measure Annotation Richness:

- Calculate the average number of keywords per dataset.

- Determine the percentage of datasets that use keywords at the specific "Variable" level or deeper.

- Simulate Search Scenarios: Design a set of test queries that a researcher might use. Execute these queries against your repository's search system.

- Evaluate Precision and Recall:

- Precision: Of the datasets returned by a search, how many are actually relevant?

- Recall: Of all the relevant datasets in the repository, how many were successfully retrieved by the search?

- Correlate Metrics: Analyze the relationship between annotation richness (Step 3) and search performance (Step 5). The hypothesis is that datasets with richer, more specific annotations will have higher recall and precision.
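The richness and search-performance measurements in this protocol can be computed as sketched below. The function names are hypothetical, and the "Variable level or deeper" test assumes that a path with at least four segments (Category > Topic > Term > Variable) reaches the Variable level.

```python
def precision_recall(retrieved: set[str], relevant: set[str]) -> tuple[float, float]:
    """Precision and recall of one search, given the set of relevant dataset IDs."""
    hits = len(retrieved & relevant)
    precision = hits / len(retrieved) if retrieved else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    return precision, recall


def richness(datasets: dict[str, list[str]], variable_depth: int = 4) -> tuple[float, float]:
    """Average keywords per dataset and percent of datasets reaching Variable level."""
    if not datasets:
        return 0.0, 0.0
    counts = [len(paths) for paths in datasets.values()]
    deep = [
        any(len([p for p in path.split(">") if p.strip()]) >= variable_depth for path in paths)
        for paths in datasets.values()
    ]
    n = len(datasets)
    return sum(counts) / n, 100.0 * sum(deep) / n


# Toy corpus with placeholder keyword paths.
corpus = {"d1": ["A > B > C > D", "A > B"], "d2": ["A > B"]}
print(richness(corpus))
print(precision_recall({"d1", "d2", "d3", "d4"}, {"d1", "d2", "d5"}))
```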

The Scientist's Toolkit: Research Reagent Solutions

The following table details key resources essential for addressing GCMD keyword annotation challenges.

| Item Name | Function / Application | Reference / Source |

|---|---|---|

| GCMD Keyword Viewer | The primary tool for browsing, searching, and accessing the complete hierarchy of controlled vocabularies. | NASA Earthdata Website [1] |

| GCMD Keyword Forum | Official platform for community discussion, asking questions, and submitting requests for new keywords. | Earthdata Forum [1] [2] |

| GCMD Keyword Governance Guide | Document outlining the formal governance structures and processes for maintaining and evolving the keywords. | GCMD Documentation [1] |

| Common Data Models (CDMs) | Standardized data models (e.g., OMOP, i2b2) used to overcome semantic barriers for data harmonization across disparate sources. | Informatics and Biomedical Literature [7] |

| Metadata Management Tool (MMT) | An example of a tool used by organizations to create and manage metadata records that leverage GCMD keywords. | Listed as a user of GCMD Keywords [1] |

Practical Workflows and Tools for Accurate GCMD Keyword Assignment

The Global Change Master Directory (GCMD) Keywords are a hierarchical set of controlled vocabularies developed by NASA to ensure Earth science data, services, and variables are described consistently [1]. They serve as a critical standard for precise metadata annotation, enabling accurate data discovery and retrieval across scientific communities and international organizations [1] [8]. For researchers, particularly in interdisciplinary fields like drug development where environmental data may be relevant, proper keyword annotation is essential for making data findable, accessible, interoperable, and reusable (FAIR).

A Methodological Workflow for Keyword Selection

Selecting the appropriate GCMD keywords requires a systematic approach. The steps below outline a workflow to guide researchers through this process.

Step 1: Identify Core Metadata Elements

Before selecting keywords, identify the fundamental aspects of your dataset: the primary scientific discipline, geographic scope, temporal coverage, measurement platforms, and relevant projects [9] [10]. This foundational step ensures your keyword selection aligns with your actual research content.

Step 2: Select Earth Science Keywords

Navigate the GCMD Earth Science keyword hierarchy, which follows this structure: Category → Topic → Term → Variable (up to three levels) → Detailed Variable [1]. For example:

- Category: Earth Science

- Topic: Atmosphere

- Term: Weather Events

- Variable: Subtropical Cyclones

- Detailed Variable: (Uncontrolled keyword for specificity) [1]

Step 3: Select Location Keywords

Choose location keywords that accurately represent where your research was conducted or the area to which it applies [9]. The GCMD Location hierarchy is: Location Category → Location Type → Location Subregion 1 → Location Subregion 2 → Location Subregion 3 → Detailed Location [1]. For example: Continent > North America > United States of America > Maryland > Baltimore [1].

Step 4: Select Temporal Keywords

Temporal keywords help users find data based on collection period or relevance to specific eras. These can include named geological periods (e.g., The Holocene) or specific date ranges (e.g., June 2010) [9]. For detailed geological time scales, use Chronostratigraphic Keywords (Eon > Era > Period > Epoch > Stage) [1].

Step 5: Select Platform and Instrument Keywords

Describe how data was collected using Platform and Instrument keywords. Platform keywords use: Basis → Category → Sub Category [1], while Instrument keywords use: Category → Class → Type → Sub Type [1]. This precisely identifies your data collection methodology.

Step 6: Select Project Keywords

If your research is associated with a formal scientific program, field campaign, or project, select the appropriate project keyword using the Short Name and Long Name (e.g., ArCS III > Arctic Challenge for Sustainability III) [2]. New project keywords can be requested through the GCMD Keyword Forum [11].

Step 7: Supplement with Arbitrary Keywords

When controlled vocabularies lack specificity, supplement with arbitrary keywords for local placenames, uncommon species, or highly specialized concepts [9]. Examples include "Pedro Bay" or "Populus trichocarpa" [9].

Step 8: Review and Validate Selections

Ensure keywords accurately represent your dataset and follow GCMD hierarchies. Use the GCMD Keyword Viewer [1] or validation tools to verify selections before finalizing your metadata record.
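
As a rough aid for the review step, the sketch below labels each part of a keyword path with its level name and rejects paths that are deeper than the hierarchy allows. The level names are taken from the hierarchies described in the steps above (with the three Variable levels spelled out); the `LEVELS` table and the `label_levels` function are hypothetical helpers, and the check does not confirm that a term actually exists in the GCMD vocabulary.

```python
# Level names per keyword set, as described in the workflow steps above.
LEVELS = {
    "science": ["Category", "Topic", "Term", "Variable Level 1",
                "Variable Level 2", "Variable Level 3", "Detailed Variable"],
    "location": ["Location Category", "Location Type", "Location Subregion 1",
                 "Location Subregion 2", "Location Subregion 3", "Detailed Location"],
    "platform": ["Basis", "Category", "Sub Category"],
    "instrument": ["Category", "Class", "Type", "Sub Type"],
    "project": ["Short Name", "Long Name"],
}


def label_levels(keyword_set, path):
    """Map each part of an 'A > B > C' path to its level name."""
    parts = [part.strip() for part in path.split(">")]
    names = LEVELS[keyword_set]
    if len(parts) > len(names):
        raise ValueError(f"{keyword_set} keywords have at most {len(names)} levels")
    if not all(parts):
        raise ValueError("empty level in keyword path")
    return dict(zip(names, parts))


location = label_levels(
    "location",
    "Continent > North America > United States of America > Maryland > Baltimore",
)
print(location["Location Subregion 2"])  # Maryland
print(label_levels("project", "ArCS III > Arctic Challenge for Sustainability III"))
```

Seeing each term next to its level name makes it easier to spot a value that has been placed one level too high or too low before the record is finalized.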

The Researcher's Toolkit: GCMD Keyword Categories

The table below details the primary GCMD keyword categories and their applications in scientific research and data annotation.

| Keyword Category | Purpose | Hierarchical Structure | Research Application |

|---|---|---|---|

| Earth Science [1] | Describes scientific discipline and measured variables | Category > Topic > Term > Variable > Detailed Variable | Core subject classification for data discovery |

| Location [9] [1] | Specifies geographic coverage | Location Category > Type > Subregion 1/2/3 > Detailed Location | Enables spatial search and regional studies |

| Temporal [9] [1] | Indicates time period covered | Named periods or date ranges | Supports historical analyses and trend studies |

| Platform/Source [1] | Identifies data collection platform | Basis > Category > Sub Category > Short/Long Name | Critical for methodology assessment |

| Instrument/Sensor [1] | Describes measurement equipment | Category > Class > Type > Sub Type > Short/Long Name | Ensures data comparability and quality control |

| Project [1] [2] | Associates data with research initiatives | Short Name > Long Name | Connects related datasets across studies |

| Data Centers [1] | Identifies responsible organization | Level 0-3 > Short/Long Name | Provides data provenance and contact information |

Frequently Asked Questions (FAQs)

What are the benefits of using controlled vocabularies like GCMD Keywords?

Controlled vocabularies ensure consistent description of Earth science data, enabling precise searching of metadata and improved data retrieval [1]. They allow data to be grouped with similar datasets on a global scale, facilitating interoperability across systems and organizations [9] [8]. This consistency is particularly valuable in interdisciplinary research where standardized terminology enables data sharing and integration across scientific domains [5].

How do I handle situations where the GCMD keywords don't have specific terms for my research?

When GCMD keywords lack specificity, you can supplement your metadata with arbitrary keywords for concepts like local placenames or uncommon species [9]. Additionally, the GCMD system allows for Detailed Variables (in Earth Science) and Detailed Locations, which are uncontrolled fields where users can add more specific terms [1]. For missing terms that should be added to the controlled vocabulary, researchers can submit requests through the GCMD Keyword Forum [1] [11].

What is the process for requesting new GCMD keywords?

New keywords can be requested through the GCMD Keyword Forum, which provides an area for discussion and submission of keyword requests [1]. The process involves submitting a formal request with relevant details (e.g., for a project keyword: short name, title, and description) [11] [2]. Requests are reviewed by the GCMD team, with typical implementation occurring within days for straightforward additions [2].

How are GCMD keywords structured for location information?

GCMD Location Keywords use a five-level hierarchy with an optional sixth uncontrolled field: Location Category → Location Type → Location Subregion 1 → Location Subregion 2 → Location Subregion 3 → Detailed Location [1]. For example: Continent > North America > United States of America > Maryland > Baltimore [1]. This hierarchical structure enables searching at various geographic scales.

Are GCMD keywords only applicable to NASA Earth science data?

While created for NASA Earth science data, GCMD Keywords have been adopted by many international organizations, research universities, and scientific institutions worldwide [1]. These include NOAA, USGS, international space agencies, oceanographic research centers, and environmental organizations [1]. The keywords are also republished by third-party vocabulary services such as Research Vocabularies Australia to support broader scientific use [12].

FAQs and Troubleshooting Guides

Frequently Asked Questions (FAQs)

Q1: What are GCMD Science Keywords and why are they important for data annotation? GCMD Science Keywords are a hierarchical set of controlled Earth Science vocabularies that help ensure Earth science data, services, and variables are described consistently. They allow for precise searching of metadata and subsequent retrieval of data, services, and variables. Using the precise definitions from this controlled vocabulary is crucial for accurate data annotation, which in turn enables better data discovery and interoperability across international scientific communities [1].

Q2: What is the governance process for new or modified GCMD Keywords? The GCMD employs a formal governance process for reviewing proposed changes. Users can submit requests via the GCMD Keyword Forum, which provides an area for discussion where participants can ask questions, submit keyword requests, discuss trade-offs, and track request status. This ensures keywords remain relevant and comprehensive in response to user needs [1] [2].

Q3: Are there automated tools to assist with GCMD keyword annotation? Yes. NASA has developed an AI-powered tool called the Global Change Master Directory Keyword Recommender (GKR). Powered by the INDUS language model and trained on 66 billion words from scientific literature, it automates keyword suggestions with greater speed and precision, helping to reduce manual tagging effort and inconsistency [13].

Q4: My dataset involves multiple disciplines. How do I select the correct keywords? The hierarchical structure of GCMD Keywords is designed for this purpose. Start with the broadest relevant "Category" (e.g., "Earth Science"), then drill down to specific "Topic," "Term," and "Variable" levels. The "Direct Method" emphasizes using the official definitions at each level to ensure the chosen keywords precisely match your dataset's content, even when it spans multiple disciplines [1].

Q5: How does the GCMD keyword system handle very specific parameters that aren't listed? The Earth Science Keywords hierarchy includes an option for a seventh uncontrolled field called "Detailed Variable." This allows users to add highly specific parameters not already in the controlled vocabulary to more precisely describe their data, while still maintaining the consistency of the higher-level terms [1].

Troubleshooting Common Annotation Challenges

Problem: Inconsistent keyword assignment among team members leading to poor data discovery.

- Solution: Implement a standard annotation protocol based on the "Direct Method."

Problem: A required keyword is missing from the GCMD vocabulary.

- Solution: Propose a new keyword via the official channel.

Problem: Uncertainty in choosing the correct level of specificity within the keyword hierarchy.

- Solution: Apply a bottom-up selection strategy.

- Identify the most specific "Variable" or "Term" that accurately describes your data.

- Ensure all parent levels (Topic and Category) are also included in your annotation. The GCMD's hierarchical structure is designed to support this precision, allowing users to tag data with a specific "Variable" like "Subtropical Depression Track" while also capturing its broader context under "Atmosphere" and "Weather Events" [1].
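
The bottom-up strategy can be illustrated with a small helper that starts from the most specific keyword path and lists every parent level that should accompany it. This is only a sketch: `with_parent_levels` is a hypothetical name, and whether parents are stored as separate entries or implied by the full path depends on your metadata format.

```python
def with_parent_levels(path):
    """Expand 'A > B > C' into ['A', 'A > B', 'A > B > C']."""
    parts = [part.strip() for part in path.split(">")]
    return [" > ".join(parts[: i + 1]) for i in range(len(parts))]


most_specific = (
    "Earth Science > Atmosphere > Weather Events > Subtropical Cyclones"
    " > Subtropical Depression > Subtropical Depression Track"
)
for level in with_parent_levels(most_specific):
    print(level)
```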

Quantitative Data on GCMD Keywords

Table 1: GCMD Earth Science Keyword Hierarchy Structure

| Keyword Level | Description | Example |

|---|---|---|

| Category | Represents a major scientific discipline. | Earth Science |

| Topic | A high-level concept within a discipline. | Atmosphere |

| Term | A subject area within a topic. | Weather Events |

| Variable Level 1 | A measured variable or parameter. | Subtropical Cyclones |

| Variable Level 2 | A more specific variable. | Subtropical Depression |

| Variable Level 3 | An even more detailed parameter. | Subtropical Depression Track |

| Detailed Variable | (Uncontrolled) For user-specific details. | User-defined |

Table 2: Evolution of the AI Keyword Recommender (GKR)

| Feature | Previous Version | Upgraded Version (as of 2025) |

|---|---|---|

| Powered by | Not specified | INDUS language model |

| Keywords Supported | ~457 | Over 3,200 (7x increase) |

| Training Data | ~2,000 metadata records | ~43,000 metadata records |

| Key Technique | Standard training | Focal loss for rare keywords |
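
To show why focal loss helps with rare keywords, the sketch below implements the commonly used per-label form, FL = -(1 - p_t)^gamma * log(p_t), in plain Python. The source only states that focal loss is used; the exact formulation, the value of `gamma`, and any class weighting inside GKR are not documented here, so the default `gamma=2.0` and the function names are assumptions for illustration.

```python
import math


def focal_loss(p, y, gamma=2.0):
    """Focal loss for one label: p is the predicted probability, y is 0 or 1."""
    p_t = p if y == 1 else 1.0 - p        # probability assigned to the true class
    p_t = min(max(p_t, 1e-12), 1.0)       # guard against log(0)
    return -((1.0 - p_t) ** gamma) * math.log(p_t)


def cross_entropy(p, y):
    """Standard cross-entropy is the gamma = 0 special case."""
    return focal_loss(p, y, gamma=0.0)


# An "easy" example (confident and correct) versus a "hard" one (confident and wrong).
for name, p in [("easy", 0.9), ("hard", 0.1)]:
    ce, fl = cross_entropy(p, 1), focal_loss(p, 1)
    print(f"{name}: cross-entropy={ce:.4f} focal={fl:.4f} ratio={fl / ce:.2f}")
```

With `gamma=2.0`, the easy example keeps about 1% of its cross-entropy loss while the hard example keeps about 81%, so training effort shifts toward labels the model still gets wrong, which is typically where rarely used keywords end up.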

Experimental Protocols for Annotation

Protocol 1: Manual Annotation Using the Direct Method

Objective: To accurately annotate a scientific dataset with GCMD Keywords by strictly adhering to official definitions.

Materials:

- GCMD Keyword Viewer website [1].

- Dataset metadata description.

- (Optional) GCMD Keyword Forum account for queries [1].

Methodology:

- Define Scope: Clearly outline the core subject matter, instrumentation, and platform of your dataset.

- Hierarchical Selection:

- Begin with the Earth Science category and select the most appropriate Topic (e.g., Oceans).

- Drill down to the relevant Term (e.g., Ocean Chemistry).

- Identify the specific Variable levels that precisely describe your measured parameters (e.g., Carbon Dioxide, Partial Pressure).

- Verify Definitions: At each level, consult the official GCMD definition to confirm a match with your data. Do not rely on assumptions.

- Ancillary Keywords: Repeat the process for other keyword categories:

- Platform/Source: Identify the basis (e.g., Space-based Platforms), category (e.g., Earth Observation Satellites), and specific short name (e.g., Aqua) [1].

- Instrument/Sensor: Classify and name the instrument used (e.g., MODIS) [1].

- Project: If applicable, specify the project short name and title (e.g., ArCS III > Arctic Challenge for Sustainability III) [2].

- Quality Control: Cross-check annotations against a sample of existing, well-annotated records in your domain.

Protocol 2: Validation Using AI-Assisted Annotation

Objective: To use the GKR tool to generate initial keyword suggestions and validate manual annotations.

Materials:

- Access to the AI-powered Keyword Recommender tool [13].

- A textual description of your dataset (abstract or summary).

Methodology:

- Input Preparation: Compose a clear, concise textual summary of your dataset, highlighting key concepts, variables, and methodologies.

- Tool Execution: Submit the text to the GKR tool for analysis.

- Suggestion Analysis: Review the list of suggested GCMD keywords provided by the AI.

- Comparative Validation: Compare the AI-generated keywords with your manual annotations from Protocol 1.

- Matches reinforce your annotation choices.

- Discrepancies require re-examination of both the official definitions and your dataset description to resolve the conflict.

- Final Selection: Apply the "Direct Method" to the final candidate keywords to ensure definitional accuracy before committing them to your metadata.
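
The comparative validation step amounts to a set comparison between the two keyword lists. The sketch below assumes both lists are available as plain `>`-separated paths; the suggested keywords are hand-written stand-ins rather than real GKR output, no GKR interface is called, and `normalize` and `compare_annotations` are hypothetical helper names.

```python
def normalize(path):
    """Uppercase and tidy spacing so the same keyword compares equal."""
    return " > ".join(part.strip().upper() for part in path.split(">"))


def compare_annotations(manual, suggested):
    """Split keywords into matches and the two kinds of discrepancy."""
    m = {normalize(k) for k in manual}
    s = {normalize(k) for k in suggested}
    return {
        "matches": sorted(m & s),          # reinforce the manual choice
        "manual_only": sorted(m - s),      # re-check against the official definition
        "suggested_only": sorted(s - m),   # candidates the manual pass may have missed
    }


manual = [
    "Earth Science > Oceans > Ocean Optics > Ocean Color",
    "Earth Science > Oceans > Ocean Chemistry",
]
suggested = [  # illustrative stand-in for tool output
    "EARTH SCIENCE > OCEANS > OCEAN OPTICS > OCEAN COLOR",
    "Earth Science > Atmosphere > Weather Events",
]
for group, keywords in compare_annotations(manual, suggested).items():
    print(group, keywords)
```

Every keyword in the two discrepancy groups should still go through the definition check in the final selection step before it is kept or dropped.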

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Resources for GCMD Keyword Annotation

| Tool / Resource | Function | Access / Notes |

|---|---|---|

| GCMD Keyword Viewer | The primary reference for browsing and understanding the hierarchical structure and official definitions of all controlled keywords [1]. | Publicly accessible online. |

| Keyword Recommender (GKR) | An AI-powered tool that suggests relevant GCMD keywords based on a textual description of a dataset, streamlining the annotation process [13]. | Integrated into NASA's data platforms. |

| GCMD Keyword Forum | The official channel for the community to ask questions, discuss keyword usage, and submit requests for new keywords or modifications [1]. | Requires a free account. |

| docBUILDER-10 | A metadata authoring tool that helps users create compliant metadata records (DIFs), ensuring all required elements are included for submission to systems like the CMR [14]. | For metadata submitters. |

| Common Metadata Repository (CMR) | The powerful backend metadata system that now serves as the source for GCMD, enabling faster and more robust searches across collection-level metadata [14]. | Underpins search interfaces. |

Frequently Asked Questions (FAQs) on GCMD Keyword Annotation

FAQ 1: What are GCMD Keywords and why are they important for my dataset?

GCMD Keywords are a hierarchical set of controlled Earth Science vocabularies that ensure Earth science data, services, and variables are described consistently [1]. Using them is crucial because they enable the precise searching of metadata and subsequent retrieval of data, services, and variables, making your research data more findable, accessible, and interoperable with other datasets [1] [8]. They are an authoritative vocabulary used by NASA's EOSDIS, NOAA, and many other international agencies and research institutions [1].

FAQ 2: I cannot find a specific keyword for my research topic. What should I do?

The GCMD Keywords are a community resource and are periodically updated. If you cannot find a suitable keyword, you can submit a request for a new keyword via the GCMD Keyword Forum [1] [2]. The process is collaborative and transparent. For instance, a researcher successfully requested the addition of the "Arctic Challenge for Sustainability III" project keyword through this forum [2].

FAQ 3: How is the GCMD Keywords hierarchy structured? I find it confusing.

The structure is multi-level, which allows for precise classification. The main keyword sets have different hierarchical structures. For example, the core "Earth Science" keywords use this framework [1]:

| Keyword Level | Example |

|---|---|

| Category | Earth Science |

| Topic | Atmosphere |

| Term | Weather Events |

| Variable Level 1 | Subtropical Cyclones |

| Variable Level 2 | Subtropical Depression |

| Detailed Variable | (Uncontrolled Keyword) |

Other keyword sets, like those for Instruments, use a different hierarchy, such as Category > Class > Type > Sub Type before specifying the instrument's Short Name and Long Name [1].

FAQ 4: Are there best practices for writing a README file that incorporates GCMD Keywords?

Yes. When creating a README file for your data, it is a best practice to use terms from standardized vocabularies like the GCMD Keywords for your discipline's geospatial and scientific keywords [15]. This enhances consistency and reusability. The recommended minimum content for a data README includes general information (like title and PI), data and file overview, sharing and access information, methodological information, and data-specific information for each dataset [15].

Troubleshooting Common GCMD Keyword Annotation Challenges

Challenge 1: Selecting the Appropriate Level of Specificity from the Hierarchy

- Problem: A user is annotating data from a Moderate-Resolution Imaging Spectroradiometer (MODIS) but is unsure how to fully represent the instrument and the measured science parameters.

- Solution:

- Identify Core Components: Break down your dataset into core components: the platform/source (e.g., the satellite), the instrument/sensor, and the science variables measured.

- Leverage Multiple Keyword Sets: Use the appropriate keyword structure for each component. Do not try to fit everything into the "Earth Science" keywords.

- Follow the Hierarchy: Navigate from the general to the specific for each component.

The table below outlines the methodology for applying these keywords correctly.

Table: Experimental Protocol for Hierarchical Keyword Annotation

| Step | Action | Example: MODIS Ocean Color Data |

|---|---|---|

| 1 | Define the Science Discipline | Use Earth Science > Oceans > Ocean Optics > Ocean Color [1]. |

| 2 | Identify the Platform | Use Platforms > Space-based Platforms > Earth Observation Satellites > Terra (EOS AM-1) [1]. |

| 3 | Specify the Instrument | Use Instruments > Earth Remote Sensing Instruments > Passive Remote Sensing > Spectrometers/Radiometers > Imaging Spectrometers/Radiometers > MODIS [1]. |

| 4 | Detail Data Resolutions | Use the relevant range keywords, e.g., Temporal Data Resolution: 1 day - < 1 week [1]. |

Challenge 2: Managing Evolving Keywords and Standards

- Problem: A research group finds that a keyword they have been using is deprecated or changed in a new release of the GCMD Keywords.

- Solution:

- Acknowledge Keyword Evolution: Understand that the GCMD Keywords have evolved over 35 years through an agile process connected with the community [5]. Changes are made to maintain relevancy.

- Implement a Metadata Review Protocol: Establish a routine (e.g., annual) to check the version of the GCMD Keywords used in your metadata and update annotations when new data is published or repositories are upgraded.

- Version Your Annotations: Keep a record of which version of the GCMD Keywords was used for annotating your datasets to ensure reproducibility.
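
One lightweight way to version annotations is to store the vocabulary release alongside each keyword and, at review time, flag keywords that were assigned under an older release and no longer appear in the current list. The sketch below assumes you have the current keyword list available locally as a set; the `Annotation` class, the `needs_review` function, the release labels, and the retired term are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Annotation:
    dataset_id: str
    keyword: str
    vocab_version: str  # release label recorded when the keyword was assigned


def needs_review(annotations, current_keywords, current_version):
    """Annotations made under an older release whose keyword is no longer listed."""
    return [
        a for a in annotations
        if a.vocab_version != current_version and a.keyword not in current_keywords
    ]


annotations = [
    Annotation("ds1", "Earth Science > Atmosphere > Weather Events", "release-A"),
    Annotation("ds2", "Earth Science > Oceans > Hypothetical Retired Term", "release-A"),
    Annotation("ds3", "Earth Science > Oceans > Ocean Optics", "release-B"),
]
current_keywords = {
    "Earth Science > Atmosphere > Weather Events",
    "Earth Science > Oceans > Ocean Optics",
}
for a in needs_review(annotations, current_keywords, "release-B"):
    print(f"{a.dataset_id}: '{a.keyword}' (annotated with {a.vocab_version})")
```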

Challenge 3: Ensuring Interoperability with Other Metadata Standards

- Problem: Your institution's data repository requires ISO 19115 metadata, but you want to leverage the simplicity and domain-specificity of GCMD Keywords.

- Solution:

- Understand Cross-Walks: GCMD Keywords are designed for interoperability. NASA's Unified Metadata Model (UMM) provides a cross-walk for mapping between the CMR-supported metadata standards, including GCMD DIF and ISO 19115 [16].

- Use Keywords within Broader Standards: GCMD Keywords are a recommended metadata standard within NASA's Earth Science Data Systems and can be incorporated into other standards-based metadata records [16]. They are not a replacement for, but a component of, comprehensive metadata.

Table: Key Research Reagent Solutions for Data Annotation

| Item Name | Function |

|---|---|

| GCMD Keyword Viewer | The primary tool for browsing and discovering the complete, up-to-date hierarchy of GCMD Keywords [1]. |

| GCMD Keyword Forum | The official channel for asking questions, discussing trade-offs, and submitting requests for new keywords [1] [2]. |

| Data README Template | A guide for creating a comprehensive readme file, which is a best practice for data sharing and a natural place to document your keyword choices [15]. |

| NetCDF CF Conventions | A critical standard for naming and describing data in netCDF files, often used in conjunction with GCMD Keywords for full data description [16]. |

| Community Governance Guide | A document outlining the formal process for reviewing and updating the keywords, providing insight into how the standard is maintained [1]. |

Experimental Workflow and Data Relationships

The following diagram illustrates the logical workflow and decision process for annotating a dataset using the indirect method of learning from existing, well-annotated examples.

Diagram 1: GCMD Keyword Annotation Workflow

The second diagram depicts the hierarchical structure of the GCMD Keywords, showing the relationship between the different keyword sets and how they collectively describe a scientific data collection.

Diagram 2: GCMD Keyword Set Relationships

The NASA Global Change Master Directory (GCMD) Keywords are a hierarchical set of controlled Earth Science vocabularies that ensure earth science data, services, and variables are described consistently [1]. This system provides a standardized framework for cataloging NASA Earth science and related data, with keywords being adopted by numerous international organizations and research institutions [1] [5].

Researchers face significant challenges in manually applying these complex keyword hierarchies to datasets. The GCMD Earth Science Keywords alone utilize a six-level structure with an optional seventh uncontrolled field (Category > Topic > Term > Variable Level 1 > Variable Level 2 > Variable Level 3 > Detailed Variable) [1]. This complexity, combined with the need for precise annotation to make data findable, accessible, interoperable, and reusable (FAIR), has driven the development of semi-automated solutions that can assist researchers in the annotation process while maintaining compliance with community standards.

Semi-Automated Annotation: A Case Study in Coral Bleaching Research

The development of a semi-automated CoralNet Bleaching Classifier by NOAA's Pacific Islands Fisheries Science Center represents a successful implementation of semi-automated annotation for a specific scientific domain [17]. This project addressed the pressing need to efficiently monitor increasing coral bleaching events across the Hawaiian Archipelago.

Key Experimental Protocol:

- Objective: Develop a tool to quickly and accurately quantify coral bleaching extent from digital imagery

- Timeframe: 2014-2019, encompassing three mass coral bleaching events

- Location: Hawaiian Archipelago, including Main Hawaiian Islands and Northwestern Hawaiian Islands

- Imagery Sources: Multiple sources including NOAA ESD surveys, Papahānaumokuākea Marine National Monument (2014), and The Nature Conservancy of Kaneohe Bay (2015)

- Annotation Platform: CoralNet online platform (https://coralnet.ucsd.edu/)

Research Reagent Solutions

Table: Essential Research Components for Semi-Automated Annotation

| Component | Function | Implementation in Case Study |

|---|---|---|

| CoralNet Platform | Web-based annotation tool and classifier development | Primary platform for annotation and classifier deployment [17] |

| Training Imagery | Representative dataset for machine learning | Benthic images from Hawaiian Archipelago (2014-2019) [17] |

| Annotation Label Set | Controlled vocabulary for consistent labeling | Custom labelset defining short code annotations for coral bleaching [17] |

| Human Annotations | Ground truth data for training and validation | Point-level labels assigned by human annotators on training imagery [17] |

| CoralNet API | Programmatic access for classification | Enables automated classification of new images using the trained classifier [17] |

Technical Challenges and Troubleshooting Guide

Common Implementation Challenges

Challenge 1: Training Data Quality and Quantity

- Symptoms: Poor classifier accuracy, inconsistent results across image types

- Root Cause: Insufficient or unrepresentative training imagery

- Solution: The NOAA team utilized diverse imagery sources spanning multiple years (2014-2019) and locations across the Hawaiian Archipelago to ensure robust training data [17]

Challenge 2: Annotation Consistency

- Symptoms: High variability in human vs. machine annotation comparisons

- Root Cause: Inconsistent application of annotation labels by human annotators

- Solution: Implementation of standardized annotation protocols and label definitions, with targeted (non-random) points for critical features [17]

Challenge 3: Integration with Existing Workflows

- Symptoms: Resistance to adoption, workflow disruption

- Root Cause: Semi-automated tools not aligning with established research practices

- Solution: The CoralNet platform maintained familiar annotation interfaces while gradually introducing automation, and provided API access for integration with existing systems [17]

Accuracy Validation Methodology

The NOAA team implemented a rigorous validation protocol to assess classifier performance:

Table: Accuracy Assessment Metrics for CoralNet Bleaching Classifier

| Validation Level | Assessment Method | Implementation in Case Study |

|---|---|---|

| Point-level | Direct comparison of machine-generated vs. human-annotated labels for each point | CSV files containing point-level comparisons for all test imagery [17] |

| Site-level | Aggregate accuracy measures across entire survey sites | Analysis of percent bleaching cover estimates at site level [17] |

| Temporal | Performance consistency across different sampling years | Imagery spanning 2014-2019 with varying bleaching conditions [17] |

| Spatial | Geographic transferability across different reef systems | Testing across multiple locations in Hawaiian Archipelago [17] |
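
The point-level and site-level comparisons in the table can be sketched as follows. The CSV layout, column names (`site`, `human`, `machine`), and the label codes `BL` and `OK` are assumptions made for illustration; they are not the actual file format or labelset used in the NOAA case study.

```python
import csv
import io

# Hypothetical point-level comparison file: one row per annotated point.
SAMPLE_CSV = """site,human,machine
A,BL,BL
A,OK,OK
A,BL,OK
A,OK,OK
B,BL,BL
B,OK,BL
"""


def point_accuracy(rows):
    """Fraction of points where the machine label equals the human label."""
    return sum(row["human"] == row["machine"] for row in rows) / len(rows)


def percent_cover_by_site(rows, label, source):
    """Percent of points per site carrying `label`, using the 'human' or 'machine' column."""
    totals, hits = {}, {}
    for row in rows:
        site = row["site"]
        totals[site] = totals.get(site, 0) + 1
        hits[site] = hits.get(site, 0) + (row[source] == label)
    return {site: 100.0 * hits[site] / totals[site] for site in totals}


rows = list(csv.DictReader(io.StringIO(SAMPLE_CSV)))
print(f"point-level accuracy: {point_accuracy(rows):.2f}")
print("human   % bleached:", percent_cover_by_site(rows, "BL", "human"))
print("machine % bleached:", percent_cover_by_site(rows, "BL", "machine"))
```

Comparing the two site-level dictionaries shows how point-level disagreements translate into differences in estimated bleaching cover, which is the quantity most analyses actually use.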

Frequently Asked Questions (FAQs)

Q1: How does semi-automated annotation specifically address GCMD keyword challenges? Semi-automated tools help researchers apply complex GCMD keyword hierarchies consistently by providing guided annotation frameworks. The CoralNet implementation demonstrates how domain-specific classifiers can standardize annotations according to community standards, which aligns with FAIR data principles that require metadata to meet "domain-relevant community standards" [18].

Q2: What is the typical accuracy trade-off with semi-automated approaches? The CoralNet project maintained rigorous validation where "machine generated labels for these points were then compared against the human generated labels" [17]. This approach allows researchers to quantify and monitor accuracy trade-offs while still benefiting from significantly increased processing speed.

Q3: How can researchers implement similar semi-automated approaches for their specific domains? The methodology follows a replicable pattern: (1) assemble comprehensive training datasets with human annotations, (2) utilize existing platforms like CoralNet or develop custom classifiers, (3) implement rigorous validation protocols comparing machine vs. human performance, and (4) deploy with API access for integration into research workflows [17].

Q4: What computational resources are required for implementing semi-automated annotation? Platforms like CoralNet provide the computational infrastructure, significantly lowering barriers to entry. The NOAA team leveraged the existing CoralNet platform rather than building custom infrastructure, demonstrating how researchers can implement semi-automated solutions without extensive computational resources [17].

Q5: How does semi-automated annotation integrate with existing data management workflows? The CoralNet implementation shows successful integration through multiple pathways: the platform provides API access for programmatic classification, supports standard data formats (CSV, JPEG), and generates outputs compatible with further analysis. This enables researchers to incorporate semi-automated steps into existing workflows rather than requiring complete workflow overhaul [17].

Integration with GCMD and FAIR Data Principles

The development of semi-automated annotation tools directly supports the implementation of FAIR (Findable, Accessible, Interoperable, and Reusable) data principles. As noted in research on metadata standards, "the FAIR principles require metadata to be 'rich' and to adhere to 'domain-relevant' community standards" [18]. Semi-automated tools address both requirements by enabling comprehensive annotation while maintaining consistency with standards like GCMD keywords.

The GCMD keyword system itself has evolved through community-driven processes, with the GCMD Keyword Forum allowing users to "ask questions, submit keyword requests, discuss trade-offs, and track the status of keyword requests" [1]. This collaborative approach mirrors the iterative development of semi-automated annotation tools, where researcher feedback continuously improves classifier performance and utility.

For researchers working with environmental data, the NOAA Omics Data Management Guide specifically recommends using GCMD keywords: "if there is a field for keywords we recommend using a controlled vocabulary such as the Omics terms in the NASA Global Change Master Directory (GCMD)" [19]. Semi-automated annotation tools can facilitate this recommendation by incorporating GCMD vocabularies directly into their classification frameworks.

Solving Common GCMD Annotation Problems and Optimizing for Efficiency

Overcoming the 'Chicken-and-Egg' Dilemma in Metadata Ecosystems

Frequently Asked Questions (FAQs)

Q1: What is the GCMD and why is using its science keywords important for my research data? The Global Change Master Directory (GCMD) provides a hierarchical set of controlled Earth Science vocabularies [1]. Using these keywords ensures that Earth science data, services, and variables are described consistently, allowing for precise searching of metadata and subsequent retrieval of data [1]. This standardization is crucial for making your data discoverable and usable by the broader scientific community, including platforms like NASA's Earthdata Search [1] [20].

Q2: I am annotating my dataset. What is the minimum required structure for a valid GCMD Science Keyword? A valid Science Keyword requires at least three levels: Category > Topic > Term [1] [21]. For example, "Earth Science > Atmosphere > Weather Events" is a valid, complete keyword. The system will fail to validate your metadata if you provide only a Category and Topic (e.g., "Earth Science > Atmosphere") or if you place a keyword from a different keyword set, such as a Project keyword, in the science keyword field [21].
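As a rough illustration of the three-level rule, the sketch below splits a keyword string on ">" and counts the non-empty levels. The function names are hypothetical, and the check covers structure only: it does not confirm that the levels actually exist in the GCMD vocabulary.

```python
def split_keyword(keyword: str) -> list[str]:
    """Split 'A > B > C' into trimmed levels, dropping empty ones."""
    return [part.strip() for part in keyword.split(">") if part.strip()]


def has_minimum_levels(keyword: str, minimum: int = 3) -> bool:
    """True when the keyword has at least Category > Topic > Term."""
    return len(split_keyword(keyword)) >= minimum


if __name__ == "__main__":
    for candidate in (
        "Earth Science > Atmosphere > Weather Events",
        "Earth Science > Atmosphere",
    ):
        status = "ok" if has_minimum_levels(candidate) else "too short"
        print(f"{candidate}: {status}")
```

A check like this is cheap to run before submission, but a keyword that passes it still needs to be confirmed against the official vocabulary.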

Q3: My ingestion request returned a '200 OK' success message, but my dataset is not appearing in searches. What could be wrong? A successful HTTP response confirms the file was received, but the metadata may have failed background indexing due to content errors [21]. The most common cause is an invalid science keyword structure that does not meet the "Category > Topic > Term" requirement [21]. Check your ingestion logs for validation errors related to keyword formatting.

Q4: How can I correctly represent different types of keywords, like 'Project' names, in my metadata?

Different keyword types must be specified using the correct <gmd:type> code in your metadata schema [21]. Science Keywords use the type "theme", while Project Keywords use the type "project" [21]. Mislabeling a Project keyword (e.g., "MEaSUREs") as a "theme" type is a common error that can lead to ingestion and indexing problems [21].
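To make the type distinction concrete, here is a minimal sketch that builds one keyword block per keyword type using Python's standard library. The helper name `keyword_block` is hypothetical, and the output is a trimmed fragment: real ISO 19115 records also carry a `codeList` attribute and other required elements that are omitted here.

```python
import xml.etree.ElementTree as ET

GMD = "http://www.isotc211.org/2005/gmd"
GCO = "http://www.isotc211.org/2005/gco"
ET.register_namespace("gmd", GMD)
ET.register_namespace("gco", GCO)


def keyword_block(keywords: list[str], type_code: str) -> ET.Element:
    """Build a gmd:descriptiveKeywords element with the given type code."""
    block = ET.Element(f"{{{GMD}}}descriptiveKeywords")
    md_keywords = ET.SubElement(block, f"{{{GMD}}}MD_Keywords")
    for keyword in keywords:
        holder = ET.SubElement(md_keywords, f"{{{GMD}}}keyword")
        ET.SubElement(holder, f"{{{GCO}}}CharacterString").text = keyword
    type_el = ET.SubElement(md_keywords, f"{{{GMD}}}type")
    # Assumption: codeList attribute left out to keep the fragment short.
    code = ET.SubElement(
        type_el, f"{{{GMD}}}MD_KeywordTypeCode", codeListValue=type_code
    )
    code.text = type_code
    return block


if __name__ == "__main__":
    science = keyword_block(
        ["EARTH SCIENCE > CRYOSPHERE > GLACIERS/ICE SHEETS"], "theme"
    )
    project = keyword_block(["MEaSUREs"], "project")
    print(ET.tostring(science, encoding="unicode"))
    print(ET.tostring(project, encoding="unicode"))
```

Keeping science and project keywords in separate blocks, each with its own type code, avoids the mislabeling error described above.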

Q5: Are there tools to help me assign the correct GCMD keywords automatically? Yes. NASA's Office of Data Science and Informatics has developed the GCMD Keyword Recommender (GKR), an AI tool powered by the INDUS language model [20] [13]. It analyzes your dataset's metadata and automatically suggests precise, standardized keywords from the over 3,200 available terms, reducing manual effort and improving accuracy [20] [13].

Troubleshooting Guides

Issue: Metadata Ingestion Succeeds but Dataset is Not Discoverable

This indicates a problem that occurs after the initial file acceptance, typically during the metadata indexing phase.

Diagnosis and Resolution Steps:

- Verify Keyword Structure: Open your metadata file and locate the science keywords. Ensure every science keyword follows the Category > Topic > Term hierarchy. Check for typos or missing elements in the keyword string [21].

- Confirm Keyword Type Codes: Inspect the XML of your metadata. For every set of keywords, verify that the <gmd:MD_KeywordTypeCode> correctly identifies the keyword type. Science keywords must be labeled with codeListValue="theme" [21].

- Incorrect: A Project keyword (e.g., "MEaSUREs") tagged as a "theme".

- Correct: A Science keyword (e.g., "EARTH SCIENCE > Cryosphere > Glaciers/Ice Sheets") tagged as a "theme", and a Project keyword tagged as "project" [21].

- Check for Ingestion Logs: Access your provider's ingestion logs in the system (e.g., CMR) to look for specific validation error messages that occurred after the initial "200 OK" response. These logs often pinpoint the exact keyword causing the indexing failure [21].

Issue: Handling "Rare" or Highly Specific Scientific Concepts

The GCMD controlled vocabulary may not contain every highly specific or new scientific term.

Diagnosis and Resolution Steps:

- Identify the Narrowest Applicable Standard Term: Use the GCMD Keyword Viewer to find the most specific available term that still encompasses your concept. For example, if "microzooplankton" is not available, use the approved term "zooplankton" [9].

- Supplement with Arbitrary Keywords: Most systems allow you to add uncontrolled "Arbitrary Keywords" to your metadata record [9]. Add your specific terms (e.g., "microzooplankton") here. While these won't be part of the global controlled vocabulary, they will be searchable within your host repository.

- Submit a Keyword Request: If a critical keyword is missing, engage with the community. The GCMD Keyword Forum provides an area for users to discuss and submit requests for new keywords, ensuring the vocabulary evolves with scientific needs [1].
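The first two steps can be sketched as a simple fallback: walk up from the desired concept until an approved path is found, then keep the unmatched levels as arbitrary keywords. The vocabulary below is a toy stand-in with placeholder Topic and Term names, not the real GCMD hierarchy, and the function names are hypothetical.

```python
Path = tuple[str, ...]

# Toy vocabulary: placeholder levels, NOT actual GCMD paths.
APPROVED: set[Path] = {
    ("EARTH SCIENCE", "EXAMPLE TOPIC", "EXAMPLE TERM"),
    ("EARTH SCIENCE", "EXAMPLE TOPIC", "EXAMPLE TERM", "ZOOPLANKTON"),
}

CONCEPT: Path = (
    "EARTH SCIENCE", "EXAMPLE TOPIC", "EXAMPLE TERM",
    "ZOOPLANKTON", "MICROZOOPLANKTON",
)


def narrowest_approved(path: Path, approved: set[Path], min_levels: int = 3):
    """Return the longest approved prefix with at least min_levels levels."""
    for end in range(len(path), min_levels - 1, -1):
        if path[:end] in approved:
            return path[:end]
    return None


def annotate(path: Path, approved: set[Path]):
    """Return (controlled keyword or None, arbitrary keywords)."""
    match = narrowest_approved(path, approved) or ()
    controlled = " > ".join(match) if match else None
    # Levels not covered by the controlled keyword become arbitrary keywords.
    arbitrary = [level.lower() for level in path[len(match):]]
    return controlled, arbitrary


if __name__ == "__main__":
    print(annotate(CONCEPT, APPROVED))
```

A result of `None` for the controlled keyword means no approved path of sufficient depth was found, which is the case where a keyword request is worth considering.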

Issue: Inconsistent Keyword Annotation Across a Large Team

Manual keyword assignment can lead to inconsistencies, reducing the effectiveness of data discovery.

Diagnosis and Resolution Steps:

- Adopt the AI Keyword Recommender (GKR): Use NASA's GKR tool in your team's workflow to standardize initial keyword assignment and reduce human error [20] [13].

- Establish Internal Annotation Guidelines: Create a simple internal protocol document that defines:

- The minimum number of science keywords per dataset.

- The specific GCMD branches most relevant to your field.

- A standard process for adding arbitrary keywords.

- Implement a Peer-Review Check: Before final submission, have a second team member review the assigned keywords against the original data documentation to ensure consistency and accuracy.
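One way to make internal guidelines enforceable is to encode them as data and check each record against them before peer review. The sketch below is an assumption about how a team might do this; the class, field names, and thresholds are hypothetical and should be replaced by your own protocol.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AnnotationGuideline:
    min_science_keywords: int       # minimum keywords per dataset
    allowed_topics: frozenset       # GCMD Topic levels the team has agreed on


def review(keywords: list[str], guideline: AnnotationGuideline) -> list[str]:
    """Return a list of human-readable problems; empty means no issues found."""
    problems = []
    if len(keywords) < guideline.min_science_keywords:
        problems.append(
            f"expected at least {guideline.min_science_keywords} "
            f"science keywords, found {len(keywords)}"
        )
    for keyword in keywords:
        levels = [part.strip().upper() for part in keyword.split(">")]
        topic = levels[1] if len(levels) > 1 else ""
        if topic not in guideline.allowed_topics:
            problems.append(f"outside agreed branches: {keyword}")
    return problems


if __name__ == "__main__":
    team_rules = AnnotationGuideline(
        min_science_keywords=2,
        allowed_topics=frozenset({"ATMOSPHERE", "CRYOSPHERE"}),
    )
    print(review(["Earth Science > Oceans > Sea Ice"], team_rules))
```

The reviewer can then focus on whether the keywords fit the data, since the mechanical parts of the protocol have already been checked.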

Quantitative Data on GCMD and Annotation Tools

The following tables summarize key information about the GCMD system and the AI tools that support it.

Table 1: GCMD Keyword Structure Overview

| Keyword Category | Hierarchy Structure | Required Levels | Example |
|---|---|---|---|
| Earth Science [1] | Category > Topic > Term > Variable > Detailed Variable | Category, Topic, Term [21] | Earth Science > Atmosphere > Weather Events |
| Projects [1] | Short Name > Long Name | Short Name | Short Name: ESIP |
| Instruments [1] | Category > Class > Type > Sub Type > Short Name > Long Name | Short Name | Short Name: MODIS |
| Location [1] | Location Category > Type > Subregion 1 > Subregion 2 > Subregion 3 | Location Category, Type | Continent > North America |

Table 2: GCMD Keyword Recommender (GKR) Evolution

| Feature | Original GKR | Upgraded GKR (Powered by INDUS) |
|---|---|---|
| Keyword Coverage [20] [13] | ~430 keywords | >3,200 keywords (roughly a 7x increase) |
| Training Data [20] | ~2,000 metadata records | ~43,000 metadata records |
| Core Technology [20] [13] | Not specified | INDUS language model (trained on 66 billion words) |
| Key Technique for Rare Keywords [20] [13] | Cross-entropy loss | Focal loss |

Experimental Protocols for Metadata Annotation

Protocol 1: Validating GCMD Science Keyword Structure in ISO 19115 XML

This protocol ensures your science keywords are correctly formatted and typed in your metadata file before submission.

- Extract Keyword Strings: Open your ISO 19115 XML metadata file. Locate all <gmd:descriptiveKeywords> blocks.

- Identify Science Keywords: Within each block, find the <gmd:type> element and confirm it contains <gmd:MD_KeywordTypeCode codeListValue="theme">. This identifies the block as containing science keywords [21].

- Parse Hierarchy: For each <gco:CharacterString> inside the identified science keyword block, parse the keyword string. It must contain at least two ">" delimiters, creating a three-part hierarchy (Category > Topic > Term) [1] [21].

- Cross-Reference with Validator: Use the GCMD Keyword Viewer to verify that the exact combination of Category, Topic, and Term exists in the official directory [1].
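The first three steps of this protocol can be approximated in a few lines of Python. This is a minimal sketch that assumes the standard ISO 19139 `gmd` and `gco` namespaces; the function name and the sample fragment are hypothetical, and the fragment is trimmed (for example, it omits the `codeList` attribute). The final cross-reference against the Keyword Viewer is not automated here.

```python
import xml.etree.ElementTree as ET

NS = {
    "gmd": "http://www.isotc211.org/2005/gmd",
    "gco": "http://www.isotc211.org/2005/gco",
}

SAMPLE = """\
<gmd:MD_DataIdentification
    xmlns:gmd="http://www.isotc211.org/2005/gmd"
    xmlns:gco="http://www.isotc211.org/2005/gco">
  <gmd:descriptiveKeywords>
    <gmd:MD_Keywords>
      <gmd:keyword><gco:CharacterString>EARTH SCIENCE &gt; CRYOSPHERE &gt; GLACIERS/ICE SHEETS</gco:CharacterString></gmd:keyword>
      <gmd:keyword><gco:CharacterString>EARTH SCIENCE &gt; ATMOSPHERE</gco:CharacterString></gmd:keyword>
      <gmd:type><gmd:MD_KeywordTypeCode codeListValue="theme">theme</gmd:MD_KeywordTypeCode></gmd:type>
    </gmd:MD_Keywords>
  </gmd:descriptiveKeywords>
  <gmd:descriptiveKeywords>
    <gmd:MD_Keywords>
      <gmd:keyword><gco:CharacterString>MEaSUREs</gco:CharacterString></gmd:keyword>
      <gmd:type><gmd:MD_KeywordTypeCode codeListValue="project">project</gmd:MD_KeywordTypeCode></gmd:type>
    </gmd:MD_Keywords>
  </gmd:descriptiveKeywords>
</gmd:MD_DataIdentification>
"""


def find_invalid_science_keywords(xml_text: str) -> list[str]:
    """Return 'theme' keywords that lack a full Category > Topic > Term path."""
    root = ET.fromstring(xml_text)
    invalid = []
    # Step 1: locate every descriptiveKeywords block.
    for block in root.iter(f"{{{NS['gmd']}}}descriptiveKeywords"):
        # Step 2: keep only blocks typed as "theme" (science keywords).
        code = block.find(".//gmd:type/gmd:MD_KeywordTypeCode", NS)
        if code is None or code.get("codeListValue") != "theme":
            continue
        # Step 3: require three non-empty levels separated by ">".
        for node in block.findall(".//gmd:keyword/gco:CharacterString", NS):
            text = (node.text or "").strip()
            levels = [part.strip() for part in text.split(">")]
            if len(levels) < 3 or not all(levels):
                invalid.append(text)
    return invalid


if __name__ == "__main__":
    print(find_invalid_science_keywords(SAMPLE))
```

In the sample, the project block is skipped because of its type code, and only the two-level science keyword is reported.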

Protocol 2: Utilizing the AI-Powered GCMD Keyword Recommender

This protocol outlines the steps to use NASA's AI tool for efficient and accurate keyword assignment.

- Prepare Input Metadata: Compile a text description of your dataset. Include the title, summary, key variables measured, instruments used, and research objectives.

- Access the Tool: Navigate to the GKR interface within the NASA Earthdata ecosystem.

- Submit for Analysis: Input your prepared dataset description into the tool.

- Evaluate Recommendations: The GKR will return a list of suggested GCMD keywords. The model is trained to handle "rare" keywords, so the list may include suggestions for niche concepts as well as common ones [20] [13].

- Select and Export: Review the suggested keywords for relevance. Select the appropriate ones and integrate them into your dataset's metadata record.

Visualization of Workflows

Diagram 1: Metadata annotation and discovery ecosystem flow.

Diagram 2: Troubleshooting workflow for metadata indexing failures.

The Scientist's Toolkit: Essential Annotation Resources

Table 3: Key Resources for GCMD Metadata Annotation

| Item | Function |
|---|---|
| GCMD Keyword Viewer [1] | The official web interface to browse and search the entire hierarchy of controlled vocabularies. |
| GCMD Keyword Recommender (GKR) [20] [13] | An AI tool that suggests relevant GCMD keywords based on a textual description of your dataset, streamlining annotation. |
| ISO 19115 Schema Guide | A reference document for the correct XML schema implementation, ensuring technical compliance for keywords and other metadata elements [21]. |
| GCMD Keyword Forum [1] | A community platform to ask questions, discuss trade-offs, and submit requests for new keywords. |
| Common Metadata Repository (CMR) | NASA's central metadata repository that ingests, validates, and indexes collection-level metadata, powering search clients like Earthdata Search [20] [21]. |

Selecting Specific Lower-Level Terms vs. Broad Upper-Level Categories

Frequently Asked Questions (FAQs)

FAQ 1: What is the fundamental structure of the GCMD Science Keywords?

The GCMD Keywords are a hierarchical set of controlled vocabularies designed to describe Earth science data in a consistent manner [1]. The Earth Science keywords follow a multi-level hierarchy:

- Category: The broadest discipline (e.g., Earth Science).

- Topic: A high-level concept within the discipline (e.g., Atmosphere).

- Term: A specific subject area (e.g., Weather Events).

- Variable Level 1: A measured parameter or variable (e.g., Subtropical Cyclones).

- Variable Level 2 & 3: More specific classifications of the variable.

- Detailed Variable: An uncontrolled field for user-specific descriptions [1].

FAQ 2: Why is selecting the most specific, applicable keyword important for my research?

Using the most specific keyword possible significantly enhances data discoverability for yourself and other researchers. Precise tagging ensures that datasets appear in filtered searches for niche topics and improves the performance of AI-based search and recommendation tools, such as NASA's GCMD Keyword Recommender (GKR), which relies on well-tagged metadata to function accurately [20] [13].

FAQ 3: What should I do if the GCMD controlled vocabulary does not contain a term specific enough for my dataset?

If a specific term is not available, you should select the narrowest available term that still accurately describes your data from the controlled vocabulary. You can then supplement this with a more precise description in the "Detailed Variable" field, which is an uncontrolled field for such cases [1] [9]. Some systems also allow the use of "Arbitrary Keywords" for local or uncommon terms not in the official directory [9].

FAQ 4: How is NASA addressing the challenge of consistent keyword annotation?

NASA's Office of Data Science and Informatics has developed an AI tool called the GCMD Keyword Recommender (GKR) [20] [13]. This tool uses the INDUS language model—trained on 66 billion words from scientific literature—to automatically suggest relevant keywords from the over 3,200 available terms [20] [13]. It employs techniques like focal loss to handle rare keywords effectively, reducing the manual burden on scientists and improving metadata consistency [20].
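For readers unfamiliar with focal loss, the sketch below shows the general binary form, which scales cross-entropy by a factor that shrinks for well-classified examples so that hard or rare labels contribute more to training. The `gamma` and `alpha` values are common illustrative defaults and are assumptions here; the source does not describe how GKR configures them.

```python
import math


def focal_loss(prob: float, label: int, gamma: float = 2.0, alpha: float = 1.0) -> float:
    """Binary focal loss for one prediction.

    prob  : predicted probability that the keyword applies
    label : 1 if the keyword truly applies, else 0
    gamma : focusing strength (assumed value; 0 recovers cross-entropy)
    alpha : class weight (assumed value)
    """
    p_t = prob if label == 1 else 1.0 - prob
    p_t = min(max(p_t, 1e-12), 1.0)  # guard against log(0)
    return -alpha * (1.0 - p_t) ** gamma * math.log(p_t)


if __name__ == "__main__":
    for p in (0.9, 0.1):
        ce = focal_loss(p, 1, gamma=0.0)   # plain cross-entropy
        fl = focal_loss(p, 1)              # focal loss with gamma = 2
        print(f"p={p}: cross-entropy={ce:.4f}, focal={fl:.4f}")
```

With `gamma` set to 0 and `alpha` to 1, the expression reduces to ordinary cross-entropy, which makes the difference between the two rows of Table 2 easy to see: a confident correct prediction is down-weighted far more than a poorly predicted one.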

Troubleshooting Guides

Problem: I cannot find a keyword that precisely matches a specific measurement in my dataset.

| Step | Action | Rationale & Additional Notes |
|---|---|---|
| 1 | Use the GCMD Keyword Viewer to navigate the hierarchy. | Start with a broad category and drill down to the most specific available term. |
| 2 | Identify the closest broader term. | For example, if your study is on "microzooplankton" but only "zooplankton" is available, select "zooplankton" [9]. |
| 3 | Utilize the Detailed Variable field. | Add the specific term "microzooplankton" in this uncontrolled field to provide necessary detail [1]. |
| 4 | (If applicable) Use the Arbitrary Keywords field in your system. | This is a system-dependent option for adding non-GCMD keywords like local place names or uncommon species [9]. |